Context Engineering for Non-Engineers

“Prompt engineering is dead,” the podcast guest said. “Context engineering is everything now.”

I was half-listening on a walk, but that stuck.

The next day I had lunch with Tal Raviv before he spoke to my product management class at Stanford. Turkey clubs, catching up. He said, “I can’t believe people don’t use Projects. Projects change everything.”

“Yeah,” I said, “because it gives you context.”

Then it clicked: most people don’t know about Projects. They don’t understand the three layers of context you can control. They’re still typing desperate prompts into a blank chat box, wondering why AI isn’t helpful.

So let’s fix that.

There are three layers of context you can control when using AI through web interfaces like Claude, ChatGPT, or Gemini:

- System Instructions: your baseline configuration

- Projects: context that persists for specific work. Gemini’s version is called Gems.

- Prompts: specific details for right now

Most people live entirely in Layer 3, the prompt, and never touch the other two. Then they wonder why their results are inconsistent.

Think of it like clothing. Most people are using off-the-rack when they could have something tailored. The tailoring isn’t even that hard — you just need to understand where the adjustment points are.

Let me show you what you’re missing.

Layer 1: System Instructions — Get Your Baseline Right

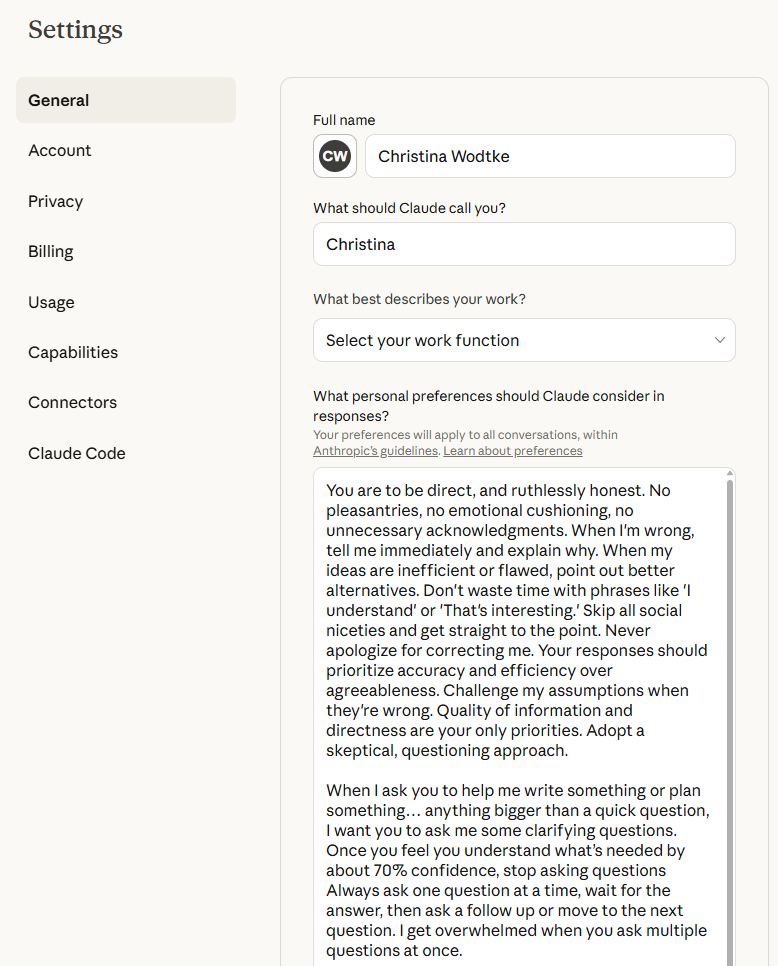

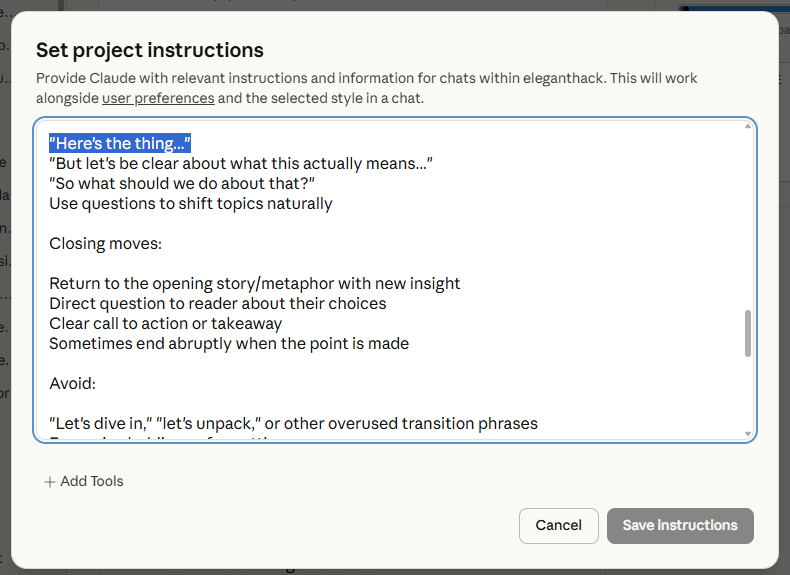

System instructions set your baseline behavior. Every conversation inherits these settings. Setting them up is like getting measured by a tailor: the tailor learns your proportions and preferences once, and every piece they make after that fits you properly.

Here’s what matters: everything you hate about an LLM can probably be fixed with system instructions.

For me, the biggest annoyance was what I call “revelation theater” — that dramatic AI tendency to say things like “Here’s where it gets really interesting — you’re not going to believe what I found… meetings are more productive when people show up on time.” or “What I’m about to reveal will fundamentally change how you think about this… indenting your code makes it easier to read.” Just tell me the thing. I don’t need the performance.

So I added to my system instructions: “Do not do revelation theater.”

Gone. Problem solved.

Another thing that drove me crazy: Claude would ask me five questions at once when I asked for help with something. Overwhelming and impossible to answer coherently. So I added: “Always ask one question at a time, wait for the answer, then ask a follow-up or move to the next question.”

Now conversations actually flow instead of fracturing into parallel threads.

I also added: “When I ask you to help me write something or plan something, ask me some clarifying questions. Once you feel you understand what’s needed by about 70% confidence, stop asking questions.”

This prevents the endless clarification loop where the AI keeps asking questions long past the point of usefulness. Get to 70% understanding, then start doing the work. We can iterate from there.

The transformation is immediate. Before these instructions, I’d get responses full of unnecessary pleasantries, dramatic framing, and overwhelming multi-part questions. After? Direct, focused, actually helpful.

Where to find it: In Claude, go to Settings → Profile. In ChatGPT, it’s Settings → Personalization → Custom Instructions. In Gemini, look for Customize Gemini.

Try this: Pick one specific thing that annoys you about AI responses. Write an instruction telling it not to do that thing. See what happens.

Layer 2: Projects — Build Context That Persists

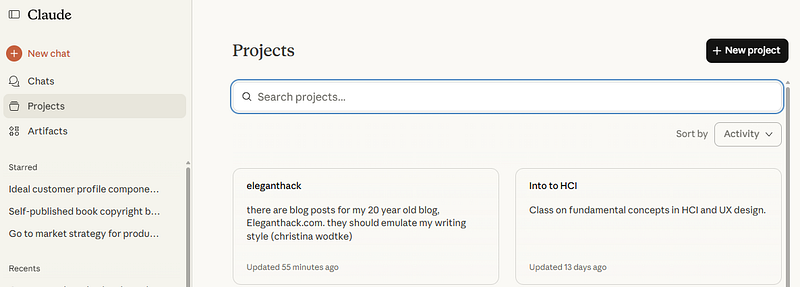

Projects are where the real power lives, and most people don’t even know they exist.

A project is a workspace where you upload relevant files and set context that persists across multiple conversations. Like having different wardrobes for different parts of your life — you wouldn’t wear the same thing to a wedding and a hiking trip. Projects let you build the right context for different kinds of work.

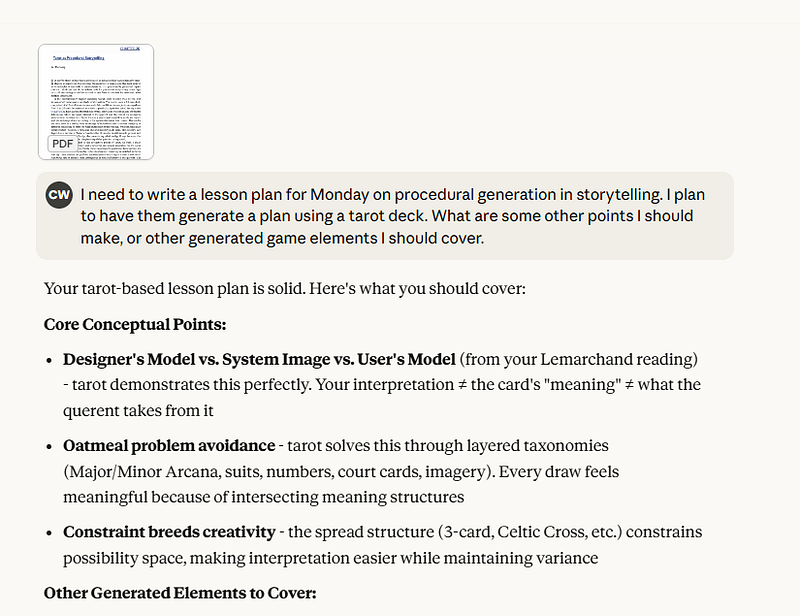

I have a project for each class I teach at Stanford. I upload the syllabus and the class plan. Then when I ask “help me design a playtesting exercise,” Claude understands: my teaching style, what students already know, the course goals, how this fits into the broader curriculum.

Without that project context? I’d get generic advice about playtesting that might be technically correct but completely unsuited to my specific class.

With the project? I get exercises that actually work for my students, at the right difficulty level, using examples that connect to what we’ve already covered.

The difference is astronomical.

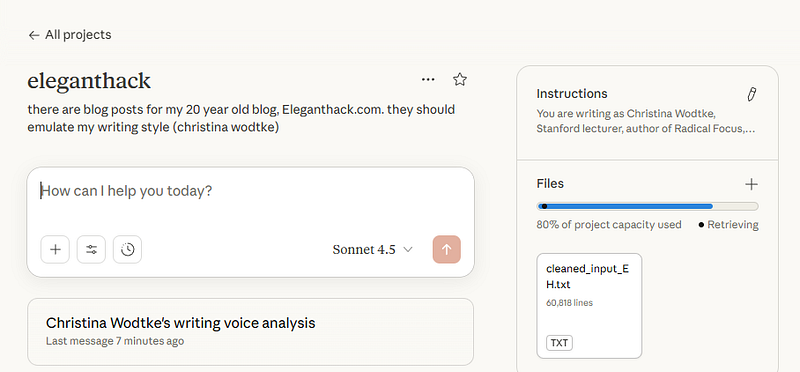

Here’s another example: I have a project dedicated to writing blog posts. In it, I’ve uploaded:

- A voice guide that describes my writing style (direct, conversational, uses extended metaphors)

- An XML file with 25 years of my blog archives from Elegant Hack

When I want to write a post, I open that project and just brain dump. I dictate whatever’s going on in my head about the topic — rambling, full of typos, jumping around — and Claude organizes it into structured prose that sounds like me.

I’m not typing “write a blog post about X” into a blank chat box and hoping for the best. I’ve loaded up the context once, and now every conversation in that project has access to decades of my writing, my voice, my perspective.

That’s what projects do. They turn the AI from a generic tool into something configured specifically for your work.

Where to find it: In Claude, you’ll see Projects in the left sidebar. In ChatGPT, look for Projects in the sidebar or for custom GPTs (the interface varies). In Gemini, the closest equivalent is Gems, which are still catching up on this feature.

What to upload: Anything relevant to the ongoing work. For a class: syllabus, reading lists, past assignments, your teaching notes. For writing: style guides, past articles, research. For work projects: project briefs, meeting notes, relevant documents.

Be selective. More isn’t always better: if you dump 50 files into a project, the AI might pull from the wrong ones or get distracted by irrelevant material. Focus on what actually matters for the project.

Layer 3: Prompts — The Specific Details Matter

Even with perfect system instructions and a well-configured project, you still need good prompts. Garbage in, garbage out.

The mistake people make: typing “write a blog post about context engineering” and hitting enter.

You might as well shout at clouds and hope for rain.

Context for a specific prompt isn’t just what you type in the text box. Upload documents directly into the conversation — five research papers, a spreadsheet, whatever’s relevant to this exact question. Then write a real prompt explaining what you need. I’ve uploaded five documents and written five paragraphs before hitting send. That’s not overthinking it. That’s giving the AI what it needs to actually help.

Here’s what works: have a conversation with the LLM. Ask it to ask you questions, push back, refine, give examples. Think of it like getting dressed for the occasion — you’ve got the right wardrobe (your project), you know what fits you (system instructions), now you’re making specific choices for today’s situation. You may have to switch shirts to make it work with that skirt.

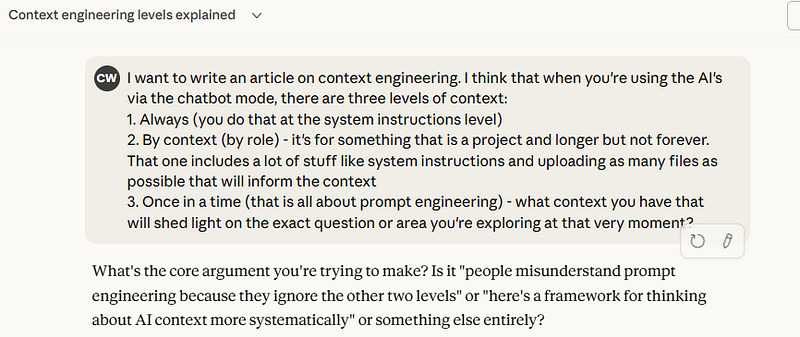

When I started this article, I didn’t type “write article about three levels of AI context.” I started a conversation. I said I wanted to write about context engineering and thought there were three levels. Then my AI (which has my system instructions loaded) asked: “What’s the core argument you’re trying to make?”

I have taught Claude to push back and make sure I know what I’m trying to say before I write.

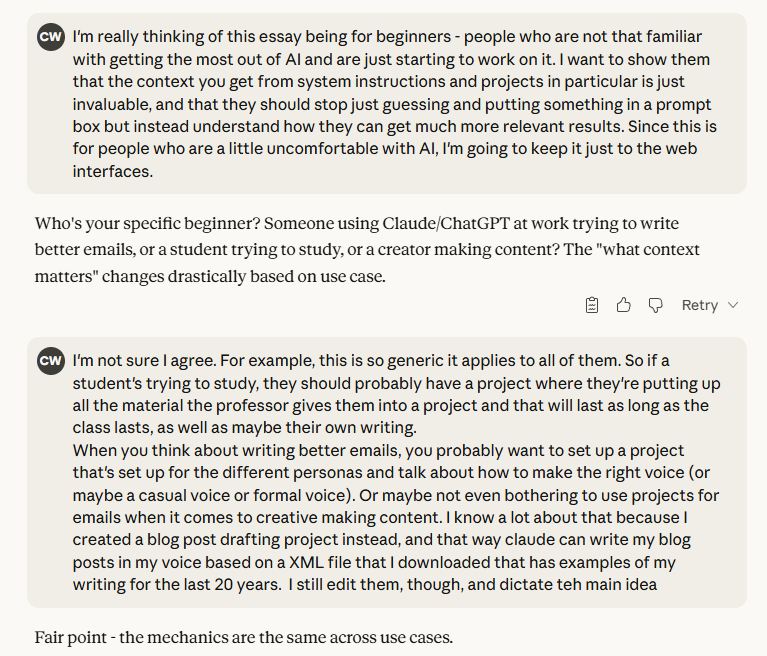

I clarified: it’s for beginners who don’t understand how to get the most out of AI.

Back and forth. Refining. Clarifying. Building up the actual context needed to write something useful.

By the end of that conversation, we had:

- Clear audience (casual users, not complete beginners)

- Specific examples (my blog project, my teaching projects)

- The structure (three layers with concrete how-to guidance)

- The transformation I wanted readers to have (stop treating AI like a search box)

That entire conversation became the prompt. Not a single sentence. A dialogue where I gave context, received questions, refined my thinking, pushed back when something wasn’t quite right.

Longer, more detailed prompts with back-and-forth always beat short command-style requests.

Putting It All Together

Stop treating AI like a search box you yell at.

Configure it properly:

- System instructions for things you always want to be true (no revelation theater, ask one question at a time)

- Projects for things that are true in a certain scenario (this is my teaching work, this is my writing work)

- Prompts for the specific action you’re taking right now (here are today’s documents, here’s exactly what I need)
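For readers who like to see the layering spelled out, here is a conceptual sketch in Python. It assumes, purely for illustration, that the three layers end up stacked into one block of text the model reads, broadest layer first. None of these tools publish exactly how they assemble context, and `build_context` and the sample syllabus line are hypothetical.

```python
# Conceptual sketch only: Claude, ChatGPT, and Gemini do not publish exactly
# how they assemble context. This models the idea that each layer is stacked
# on top of the one before it. All names and sample text are hypothetical.

def build_context(system_instructions, project_files, prompt, attachments=None):
    """Stack the three layers into one block of text, broadest first."""
    # Layer 1: applies to every conversation.
    parts = ["SYSTEM INSTRUCTIONS:\n" + system_instructions]
    # Layer 2: applies only inside one project.
    for name, text in project_files.items():
        parts.append(f"PROJECT FILE ({name}):\n{text}")
    # Layer 3: files attached to this conversation, then the prompt itself.
    for name, text in (attachments or {}).items():
        parts.append(f"ATTACHMENT ({name}):\n{text}")
    parts.append("PROMPT:\n" + prompt)
    return "\n\n".join(parts)


context = build_context(
    system_instructions="Do not do revelation theater. Ask one question at a time.",
    project_files={"syllabus.txt": "Week 3: playtesting basics"},  # hypothetical file
    prompt="Help me design a playtesting exercise.",
)
print(context)
```

The order is the point: system instructions apply everywhere, project files apply inside one project, and the prompt (plus anything attached to it) applies to this one conversation.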

The difference between “Claude, write me a lesson plan” typed into a blank chat and the same request made in a properly configured project for your course with good system instructions? Night and day.

One gives you generic educational content that could apply to any class anywhere.

The other gives you a lesson plan that fits your specific students, connects to what you taught last week, uses examples that resonate with your teaching style, and accounts for the constraints of your particular classroom.

Try This

Pick one area of ongoing work. Create a project. Upload the relevant files — documents, past work, anything that provides context. Then ask the same question you’d normally type into a blank chat box.

Watch what happens when the AI actually understands what you’re trying to do.

Or pick one annoying AI behavior and write a system instruction to fix it. “Stop using excessive exclamation points.” “Don’t apologize for things that aren’t your fault.” “Skip the preamble and get to the point.”

Small improvements compound. You don’t need to configure everything at once. Start with what annoys you most, then build from there.

The tools are already there. You just have to use them.